Snowflake

Steps to add a Snowflake data warehouse as a data destination

PrerequisitesYour Snowflake user must be granted securityadmin and sysadmin roles to complete the next step. To verify these roles, run SHOW GRANTS TO USER <your_username>; and review the role column.

Step 1: Create role, user, warehouse, and database in the data warehouse

- Review and make any changes to the following SQL setup script.

begin;

-- create variables for user / password / role / warehouse / database

set role_name = 'TRANSFER_ROLE';

set user_name = 'TRANSFER_USER';

set user_password = 'some_password';

set warehouse_name = 'TRANSFER_WAREHOUSE';

set database_name = 'TRANSFER_DATABASE';

-- change role to securityadmin for user / role steps

use role securityadmin;

-- create role for data transfer service

create role if not exists identifier($role_name);

grant role identifier($role_name) to role SYSADMIN;

-- create a user for data transfer service

create user if not exists identifier($user_name)

password = $user_password

default_role = $role_name

default_warehouse = $warehouse_name;

grant role identifier($role_name) to user identifier($user_name);

-- change role to sysadmin for warehouse / database steps

use role sysadmin;

-- create a warehouse for data transfer service

create warehouse if not exists identifier($warehouse_name)

warehouse_size = xsmall

warehouse_type = standard

auto_suspend = 60

auto_resume = true

initially_suspended = true;

-- create database for data transfer service

create database if not exists identifier($database_name);

-- grant service role access to warehouse

grant USAGE

on warehouse identifier($warehouse_name)

to role identifier($role_name);

-- grant service access to database

grant CREATE SCHEMA, MONITOR, USAGE

on database identifier($database_name)

to role identifier($role_name);

commit;

NOTE: Using an existing warehouse or databaseBy default, this script creates a new warehouse and a new database. If you'd prefer to use an existing warehouse/database, change the warehouse_name variable from TRANSFER_WAREHOUSE to the name of the warehouse to be shared and the database_name variable from TRANSFER_DATABASE to the name of the database to be shared.

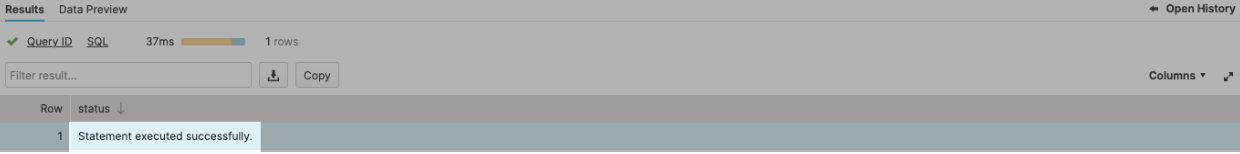

- In the Snowflake interface, select the All Queries checkbox, and click "Run". This will run every query in the script at once. If successful, you will see Statement executed successfully in the query results.

Step 2: Configure the Snowflake access policy

If your Snowflake data warehouse is using Snowflake Access Policies, a new policy must be added to allow the transfer service static IP to write to the warehouse.

- Review current network policies to check for existing IP safelists.

SHOW NETWORK POLICIES; - If there is no existing Snowflake Network Policies (the SHOW query above returns no results), you can skip to Step 3.

- If there is an existing Snowflake Network Policy, you must alter the existing policy or create a new one to safelist the data transfer service static IP address. Use the CREATE NETWORK POLICY command to specify the IP addresses that can access your Snowflake warehouse. The IP addresses to whitelist is 35.192.85.117.

CREATE NETWORK POLICY <transfer_service_policy_name> ALLOWED_IP_LIST = ('35.192.85.117/32');

NOTE: Creating your first network policyIf you have no existing network policies and you create your first as part of this step, all other IPs outside of the ALLOWED_IP_LIST will be blocked. Snowflake does not allow setting a network policy that blocks your current IP address. (An error message results while trying to create a network policy that blocks the current IP address.) But be careful when setting your first network policy.

Step 3: Connect Snowflake to Fairing!

Use the form provided by Fairing to connect your Snowflake account.

- The host is the same as the URL you use to access your Snowflake instance, without the https:// section (for example, gb044444.us-central1.gcp.snowflakecomputing.com)

- The port is most likely 443

- The database is the one from Step 1

- The username is the one from Step 1

- The password is the one from Step 1

- The schema is the one you would like the tables to be written to

Updated 5 months ago